[Dev Catch Up #98] - Claude Opus 4.6, GPT-5.3-Codex, Qwen3-Coder-Next, Codex App, NanoClaw, PaperBanana, Vercel Labs' json-render, No Ads in Claude, git-rebase, ElevenLabs' Skills, Xcode is now an AI IDE!

Bringing devs up to speed on the latest dev news and trends, including a bunch of exciting developments and articles.

Welcome to the 98th edition of DevShorts, Dev Catch Up.

For those who joined recently or are reading Dev Catch Up for the first time, I write about developer stories and open source, partly based on my work and experience interacting with people all over the globe.

Must Read

The coding model race is heating up, with new releases from several providers this week. Anthropic announced Claude Opus 4.6. We all know Opus 4.5 already performs really well, and they call this new version their strongest model yet. They also shipped API updates like adaptive thinking, effort levels, and context compaction. Check the Anthropic Opus 4.6 post for details.

OpenAI has introduced GPT-5.3-Codex. OpenAI says this model is built for agent-style coding and long-running tasks with tool use. They also mentioned that GPT-5.3-Codex helped build itself. Check the OpenAI GPT-5.3-Codex post for details.

Qwen announced Qwen3-Coder-Next, a new open-weight model built for coding agents. It is tuned for long code sessions and agent loops, and it has a big context window. If you are trying to run a coding agent on your own machine, this is worth a look. Check out Qwen3-Coder-Next for details.

Kling AI announced Kling 3.0, and it looks like a solid upgrade. It improves video length and consistency. It also adds image generation with 4K output, plus native audio that supports multiple characters and multiple languages. Check Kling AI's announcement on Kling 3.0 for details.

OSS Highlight of the Week

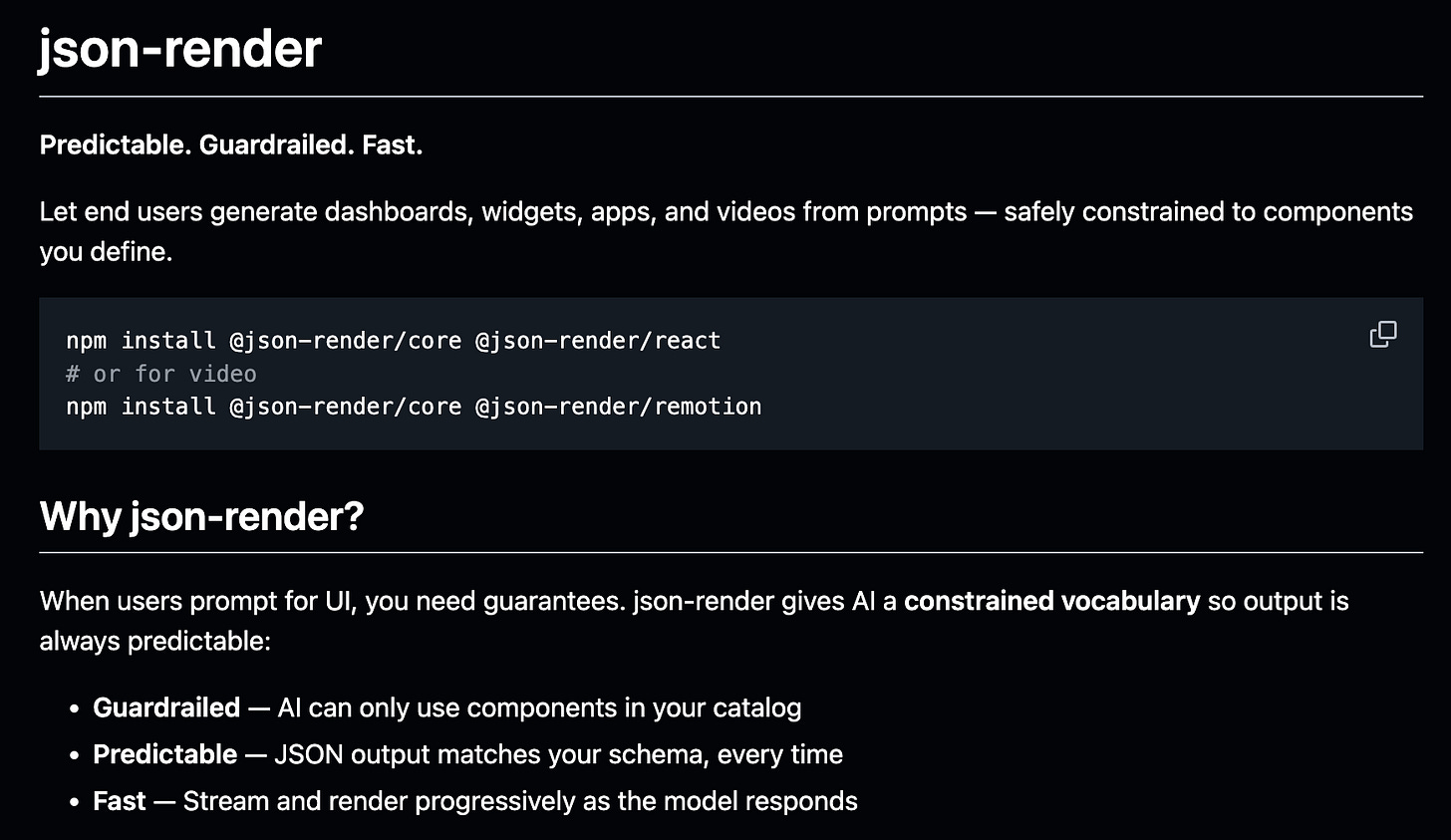

This week we are featuring json-render from Vercel Labs. It helps you build AI-generated UI without letting the model output raw HTML. Instead, the model outputs a JSON layout that uses only the components you allow. json-render then reads that JSON and renders the UI in your app. Check the json-render GitHub repo for details.
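To make the idea concrete, here is a minimal sketch of the "allowlisted JSON layout" pattern in Python. This is not json-render's actual API (the real project is a JavaScript library). The component names, the `ALLOWED_COMPONENTS` table, and the `validate`, `render`, and `render_model_output` helpers are all hypothetical, and the text output stands in for real UI components.

```python
import json

# Hypothetical allowlist: component name -> prop names the model may set.
ALLOWED_COMPONENTS = {
    "Card": {"title"},
    "Text": {"content"},
    "Button": {"label"},
}


def validate(node):
    """Raise ValueError if a node uses a component or prop outside the allowlist."""
    if not isinstance(node, dict):
        raise ValueError("node must be a JSON object")
    kind = node.get("type")
    if kind not in ALLOWED_COMPONENTS:
        raise ValueError(f"component not allowed: {kind!r}")
    props = node.get("props", {})
    if not isinstance(props, dict):
        raise ValueError("props must be a JSON object")
    extra = set(props) - ALLOWED_COMPONENTS[kind]
    if extra:
        raise ValueError(f"props not allowed on {kind}: {sorted(extra)}")
    children = node.get("children", [])
    if not isinstance(children, list):
        raise ValueError("children must be a JSON array")
    for child in children:
        validate(child)


def render(node, depth=0):
    """Turn a validated node into indented text lines (stand-in for real UI)."""
    props = " ".join(f"{k}={v!r}" for k, v in sorted(node.get("props", {}).items()))
    header = node["type"] + (" " + props if props else "")
    lines = ["  " * depth + f"<{header}>"]
    for child in node.get("children", []):
        lines.extend(render(child, depth + 1))
    return lines


def render_model_output(raw):
    """Parse the model's JSON, reject anything off the allowlist, then render."""
    tree = json.loads(raw)
    validate(tree)
    return "\n".join(render(tree))
```

Calling `render_model_output` on a layout built from `Card` and `Text` nodes returns the rendered text, while a layout that asks for an unknown component such as `Script` raises `ValueError` before anything is rendered. The key design choice is that the model never produces markup directly; it can only pick from components the app has already approved.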

Good to know

PaperBanana is a research project for making clean academic figures. It uses a multi-agent pipeline that looks at similar examples, picks a layout, keeps the style consistent, and then generates the final illustration. Check PaperBanana for more details.

Every week we mention articles about API techniques and security basics. This ByteByteGo post talks about top authentication techniques. Another Substack post discusses 16 API concepts like versioning, rate limiting, and more. If you work on the backend, both are worth a read to brush up on these API concepts.

Claude just took a clear stance on ads. They said ads are coming to AI, but not to Claude. It also reads like a pointed response to the recent talk around ad-supported ChatGPT plans. Check Claude’s tweet on ads in AI.

If git rebase still scares you, this post is for you. It explains what rebase does in plain words. It also shows a safe way to use it without breaking your branch history. Check the post on Git Rebase for the Terrified for details.

If you have ever used OCR models and wondered how modern document extraction works, this post is a good read. It explains why classic OCR struggles with different templates and why teams move to vision LLMs. Check the post on building a vision LLM for more details.

Notable FYIs

OpenAI introduced the Codex app. It lets you run multiple coding agents in parallel. It has built-in support for worktrees, so agents can work on the same repo without conflicts. It supports Skills too. Check OpenAI’s Codex app announcement for more details.

OpenAI also introduced OpenAI Frontier. It is a platform to build and run AI agents for enterprise workflows, with permissions and controls. Check the OpenAI Frontier announcement for details.

ElevenLabs has released skills. They include text-to-speech, speech-to-text, sound effects, and music generation skills. You can plug these into agents and build relevant applications faster. Check the ElevenLabs skills for details.

Last week we covered OpenClaw. NanoClaw is a smaller version of the same idea. It runs in containers so you can keep things more isolated. It focuses on WhatsApp workflows, with features like memory, scheduling, and skills. Check the NanoClaw repo for details.

Apple just turned Xcode into an agent-native IDE. It lets coding agents like Claude Agent and Codex work inside the project. Xcode now supports MCP, so other MCP-compatible agents can plug into Xcode tooling too. Check the Apple Newsroom post for details.

That’s it from us for this edition. We hope you are going away with a ton of new information. Lastly, share this newsletter with your colleagues and pals if you find it valuable. And if you are reading it for the first time, a subscription to the newsletter would be awesome.