[Dev Catch Up #38] - Mixture-of-Transformers architecture, FrontierMath Benchmark, Advanced filtering in Next.js, Better Binary Quantization, and much more.

Bringing devs up to speed on the latest dev news and trends, including a bunch of exciting developments and articles.

Welcome to the 38th edition of DevShorts, Dev Catch Up!

I write about developer stories and open source, partly from my work and experience interacting with people all over the globe.

Must Read

Training multimodal models requires larger datasets and more computational resources than training text-only LLMs, which makes scaling a challenge. To tackle it, Mixture-of-Transformers (MoT), a sparse multimodal architecture, was introduced to reduce computational costs. Learn more about it here.

Epoch AI recently introduced FrontierMath, a benchmark that evaluates advanced mathematical reasoning in AI. It covers a wide range of computationally intensive problems, from number theory to algebraic geometry. Learn more about this benchmark in this article.

The AI boom has helped everyone automate mundane, time-consuming tasks like summarizing large amounts of data, pattern matching, and retrieving information from disparate sources. AI agents can take on this kind of work, which makes them genuinely useful to on-call engineers during incidents. Here is a guided tutorial on creating an AI agent for incident response.

Advanced filtering lets users narrow down items by several parameters at once, which makes it a valuable feature in a Next.js application. Here is an article that discusses managing search param filtering in the Next.js App Router.

Next up, it is a delight to shout out a top open-source project, so here it is:

OSS Highlight of the Week

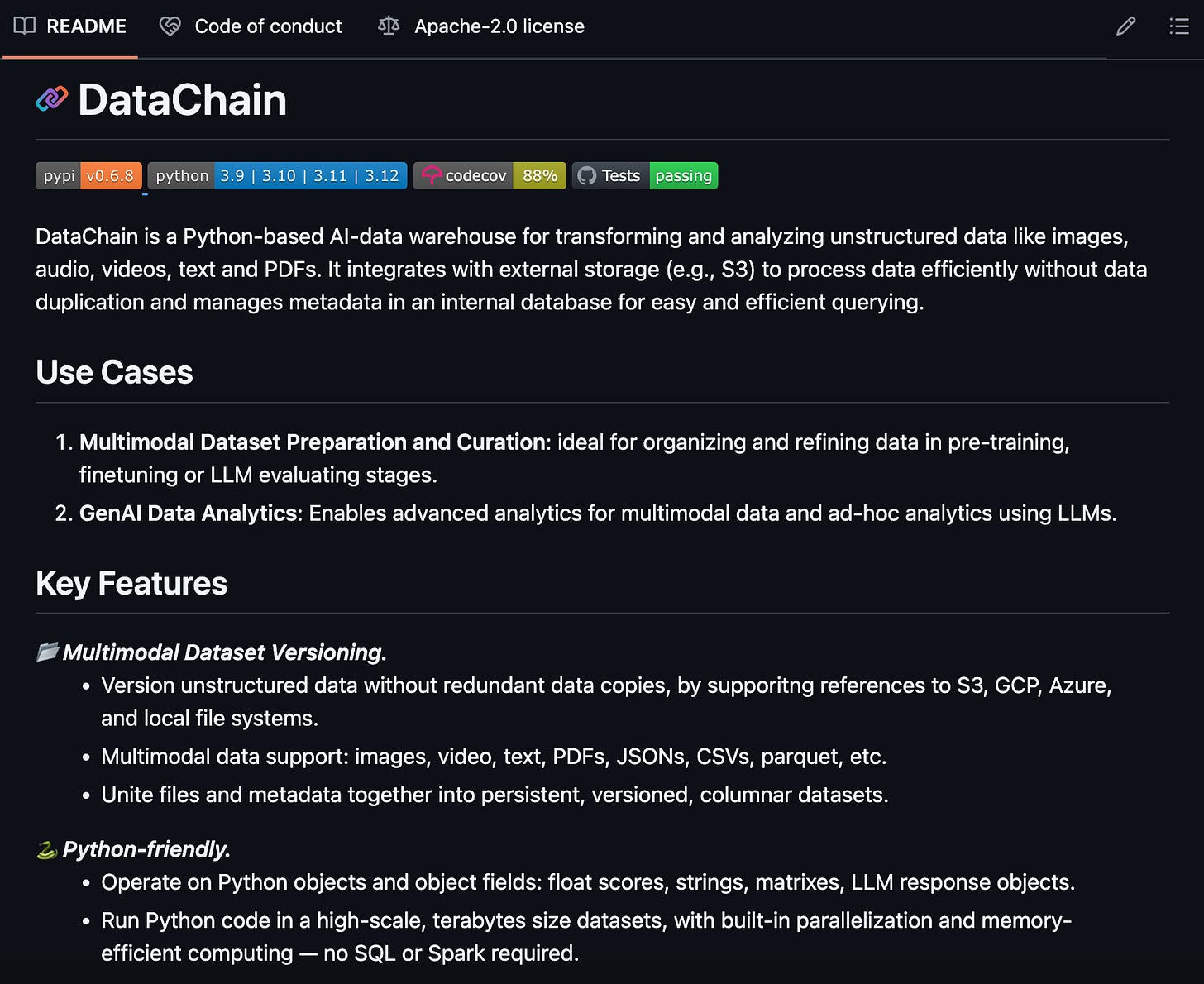

This week’s open-source project, which has garnered quite a lot of attention, comes from Iterative.ai. Their Python-based AI data warehouse, DataChain, helps transform and analyze unstructured data like images, text, audio, and PDFs. Check it out on their GitHub page and leave a star to support it.

Now, we will head over to some of the news and articles that will be of interest to developers and the tech community.

Hope you are enjoying this edition of our newsletter so far! Support us by giving a free follow to our LinkedIn and X pages.

Your support is highly appreciated!

Good to know

AI is evolving rapidly, from holding conversations and returning search results to actually getting work done, and agents have been a primary part of that shift. To push this agentic future forward, Microsoft released Magentic-One, a generalist multi-agent system for solving complex tasks. More information about it can be found here.

Better Binary Quantization (BBQ) is a leap forward in quantization and an advance over predecessors such as Product Quantization and Scalar Quantization. It delivers approximately 95% memory reduction by reducing float32 dimensions to bits while maintaining high ranking quality. This article explains how it benefits Lucene and Elasticsearch.
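To get a feel for where a saving of that size comes from, here is a minimal Python sketch. It is not Lucene's or Elasticsearch's BBQ algorithm: it uses plain sign-bit quantization as a stand-in, and the function names and the `overhead_bytes` parameter are hypothetical, added only for illustration. A float32 dimension takes 32 bits, so keeping a single bit per dimension removes 31/32 of the vector payload; any per-vector metadata a real implementation stores would pull that figure down somewhat.

```python
def binarize(vector):
    """Pack one bit per dimension: 1 if the value is positive, else 0.

    Naive sign-bit quantization, used here only as an illustration.
    """
    packed = bytearray((len(vector) + 7) // 8)
    for i, value in enumerate(vector):
        if value > 0:
            packed[i // 8] |= 1 << (i % 8)
    return bytes(packed)


def hamming_distance(a, b):
    """Count differing bits between two packed vectors of equal length."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def memory_reduction(dims, overhead_bytes=0):
    """Fraction of memory saved versus float32 storage.

    overhead_bytes is a hypothetical per-vector allowance for any extra
    data stored alongside the bits; it is not a value taken from Lucene.
    """
    float_bytes = dims * 4                          # 4 bytes per float32
    bit_bytes = (dims + 7) // 8 + overhead_bytes    # 1 bit per dimension
    return 1 - bit_bytes / float_bytes


if __name__ == "__main__":
    query = binarize([0.5, -1.0, 2.0, -0.1, 0.0, 3.0, -2.0, 1.0])
    doc = binarize([0.4, 1.0, 1.5, -0.3, -0.2, 2.0, -1.0, -1.0])
    print("hamming distance:", hamming_distance(query, doc))
    print("reduction, bits only:", memory_reduction(1024))
    print("reduction, with overhead:", memory_reduction(1024, overhead_bytes=16))
```

Comparing packed vectors with a bit-level distance such as Hamming distance is what keeps search over quantized vectors cheap; how ranking quality is preserved is the part the linked article covers.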

Headless WordPress is shaping up as the future of content management systems, and pairing it with a Next.js frontend gives you a highly responsive UI on top of an easily managed backend. Here is a guided tutorial on building and deploying exactly that.

In the world of Postgres, choosing the right indexes can make a huge difference in performance. An index in Postgres is like the one at the back of a book: without it, Postgres has to find data by scanning every row, so picking a suitable one matters a great deal. This article discusses in detail how to choose the right index for Postgres.

Code security is a must even in hardened environments with almost impenetrable infrastructure, because attackers can still exploit vulnerabilities in the source code. Here is an article that highlights its importance with an example based on a Node.js application.

Lastly, we will take a look at some of the trending scoops that hold a special mention for the community.

Notable FYIs

The rise in the development of AI agents is noteworthy, with vertical agent platforms leading the hype. Here is a podcast with Stanislas Polu that covers the development of a horizontal agents platform and his competition with LangChain.

Submitting a form in React with a Server Action resets the form once the Server Action has executed. The reset happens either automatically or manually, depending on the framework used on top of React. This tutorial shows how to keep the form state intact after the Server Action runs.

Agent architectures promise that AI can become more capable by coordinating simpler tasks, marshalling resources, and working incrementally towards a solution, instead of relying only on clever prompting, fine-tuning, and scaling of LLMs. Here is an article that demonstrates this by developing a RAG context refinement agent.

Open-source AI models have contributed greatly to the advancement of AI by enabling all types of developers to build solutions on top of them. This article discusses the best open-source models that developers can use for free.

Fine-tuning refers to the further training of a pre-trained model. Read this article for a brief summary of language model fine-tuning.

That’s it from us for this edition. We hope you are going away with a ton of new information. Lastly, share this newsletter with your colleagues and pals if you find it valuable, and if you are reading for the first time, a subscription would be awesome.